
SCOOP, or Simple Concurrent Object-Oriented Programming, is a model originally conceived for Eiffel to easily create parallel code. Eiffel has native support for rich types and pre-conditions, which has led to a design-by-contract paradigm in that programming language. The JSCOOP project that I am working on aims to bring the principles of design-by-contract and simple concurrency to Java.

The first thing we need to think about is the design-by-contract idea in Eiffel. How do we translate a model that builds on this paradigm (explicit pre-conditions and post-conditions for any method) to Java? JSCOOP gets halfway there by adding support for pre-conditions to any method. It looks like this:

@await(pre="!buffer.isEmpty()") public String consume(@separate Buffer buffer) { return buffer.take(); }

I’ll explain the @separate annotation later, but let’s take a look at @await. Whenever this method is invoked, the system will enforce that the buffer is not empty before it allows the call to go through. Note that this does not mean that the method is called every time the buffer becomes non-empty; it only means that a call, once made, waits until the condition holds. Note also that we haven’t gained any parallelism here yet; we’ve just restricted ourselves.
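To make the "wait until the pre-condition holds" behaviour concrete, here is a rough plain-Java analogue using `wait`/`notifyAll`. This is only a sketch of the semantics and not how JSCOOP implements @await; the `GuardedBuffer` and `AwaitSketch` classes are hypothetical names made up for illustration.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical stand-in for the Buffer in the post; not JSCOOP's actual class.
class GuardedBuffer {
    private final Deque<String> items = new ArrayDeque<>();

    // Plain-Java analogue of @await(pre="!buffer.isEmpty()"):
    // the caller blocks until the condition holds, then proceeds.
    public synchronized String take() throws InterruptedException {
        while (items.isEmpty()) {
            wait(); // releases the monitor until a put() notifies us
        }
        return items.removeFirst();
    }

    public synchronized void put(String item) {
        items.addLast(item);
        notifyAll(); // wake waiters so they can re-check their pre-condition
    }

    public synchronized boolean isEmpty() {
        return items.isEmpty();
    }
}

class AwaitSketch {
    public static void main(String[] args) throws InterruptedException {
        GuardedBuffer buffer = new GuardedBuffer();
        Thread consumer = new Thread(() -> {
            try {
                System.out.println("consumed " + buffer.take());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();    // waits inside take() for as long as the buffer is empty
        buffer.put("hello"); // the pre-condition now holds, so the consumer proceeds
        consumer.join();
    }
}
```

The consumer is not triggered by the buffer becoming non-empty; it made its call first and was simply held back until the condition was true.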

Enter @separate. This simple annotation says: this is a reference to an object that runs on a different thread from the one the current code is running on (it is certainly more complicated than that). When we execute a method on a separate object, we’re really asking for that method to be executed on the other thread, whenever it gets the chance.

The entity that executes these “remote” methods is called a Processor in JSCOOP. The processor has its own thread of execution and, most importantly, executes requests in the order in which they are given. A processor can handle one or more objects. This means that we could have two separate Buffer objects both running on the same Processor, and thus only one of them would be executing at any given time.
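As a mental model (and only that), a Processor behaves a lot like a single-threaded executor: one thread, one FIFO queue of requests. The sketch below uses `java.util.concurrent` rather than anything from JSCOOP, and `ProcessorSketch` and its methods are hypothetical names. It shows two objects handled by the same processor, with their requests running one at a time in submission order.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical model of a Processor: one thread, one FIFO request queue.
class ProcessorSketch {
    private final ExecutorService worker = Executors.newSingleThreadExecutor();

    // Queue a request; it runs later on the processor's own thread.
    void submit(Runnable request) {
        worker.execute(request);
    }

    // Stop accepting requests and wait for the queued ones to finish.
    void shutdownAndWait() throws InterruptedException {
        worker.shutdown();
        worker.awaitTermination(5, TimeUnit.SECONDS);
    }

    static List<String> demo() throws InterruptedException {
        ProcessorSketch processor = new ProcessorSketch();
        List<String> log = Collections.synchronizedList(new ArrayList<>());

        // Two different objects, both handled by the same processor.
        List<String> bufferA = new ArrayList<>();
        List<String> bufferB = new ArrayList<>();

        processor.submit(() -> { bufferA.add("a1"); log.add("A.add"); });
        processor.submit(() -> { bufferB.add("b1"); log.add("B.add"); });
        processor.submit(() -> log.add("A.remove " + bufferA.remove(0)));

        processor.shutdownAndWait();
        return log; // requests ran strictly in the order they were submitted
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo());
    }
}
```

Because both buffers share one worker thread, a request on `bufferA` and a request on `bufferB` can never run at the same time, even though they are different objects.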

Stepping back to consider this, we must understand then that the @separate annotation marks references that are potentially separate, rather than references that are definitely separate.

How does JSCOOP prevent concurrent access?

Consider a Producer-Consumer problem in which all Producers and Consumers share a reference to a common Buffer. Using the JSCOOP model, we store a @separate reference to the same Buffer in each of the Producer/Consumer instances. But surely when we run the Producers/Consumers on separate threads, we’ll concurrently modify the buffer, leading to classic problems.

We now introduce the concept of locking a Processor. Whenever a @separate reference is declared as the parameter of a method (as in the consume example above), we lock the Processor that owns the object referenced in the parameter. Locking a processor says, “only I can add remote calls to this processor’s queue”. What it means is that we’re not actually executing buffer.take() immediately; we’re putting it onto the run queue of buffer’s Processor (its handler). In this case, we require the result of buffer.take(), so we must wait for the call to finish, but that isn’t always the case (this concept is called wait-by-necessity). After adding all of the calls in the method to the queue, and waiting for whichever ones we require, we can unlock the processor, allowing other methods that need it (read: have a @separate parameter whose reference is handled by the same Processor) to have their requests go through.
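The lock, enqueue, wait-if-needed, unlock sequence can be sketched in plain Java. Everything here is an assumption for illustration: `LockableProcessor`, `command`, `query` and `WaitByNecessitySketch` are hypothetical names, and the real JSCOOP runtime is more involved than this. The point is only that a call whose result we don't need is queued and forgotten, while a call whose result we do need forces us to block on it.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical handler: a run queue plus a lock on who may add to it.
class LockableProcessor {
    private final ExecutorService runQueue = Executors.newSingleThreadExecutor();
    private final ReentrantLock enqueueLock = new ReentrantLock();

    void lock() { enqueueLock.lock(); }
    void unlock() { enqueueLock.unlock(); }

    // A call with no result: queued, and the caller carries on immediately.
    void command(Runnable call) {
        requireLock();
        runQueue.execute(call);
    }

    // A call with a result: queued, and the caller receives a Future for it.
    <T> Future<T> query(Callable<T> call) {
        requireLock();
        return runQueue.submit(call);
    }

    void shutdown() { runQueue.shutdown(); }

    private void requireLock() {
        if (!enqueueLock.isHeldByCurrentThread()) {
            throw new IllegalStateException("processor must be locked before enqueueing");
        }
    }
}

class WaitByNecessitySketch {
    // Analogue of a method with a @separate Buffer parameter that returns nothing.
    static void produce(LockableProcessor handler, Deque<String> buffer, String item) {
        handler.lock();                                  // only we may enqueue now
        try {
            handler.command(() -> buffer.addLast(item)); // no result needed: don't wait
        } finally {
            handler.unlock();                            // let other callers in
        }
    }

    // Analogue of consume(@separate Buffer buffer) from the post.
    static String consume(LockableProcessor handler, Deque<String> buffer) throws Exception {
        handler.lock();
        try {
            Future<String> taken = handler.query(buffer::removeFirst);
            return taken.get();                          // wait by necessity: we need the value
        } finally {
            handler.unlock();
        }
    }

    static String demo() throws Exception {
        LockableProcessor handler = new LockableProcessor();
        Deque<String> buffer = new ArrayDeque<>();       // only ever touched on the handler's thread
        try {
            produce(handler, buffer, "item");
            return consume(handler, buffer);
        } finally {
            handler.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(demo());
    }
}
```

Note that the buffer itself needs no synchronization in this sketch: every access to it happens on the handler's single thread, and the lock only controls who gets to add requests to the queue.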

Thus, concurrent access is prevented in the Processor. Because it has a run queue, it executes the next method only when locking and pre-conditions allow. This is guaranteed to be safe because each object has only one handling Processor.

That’s all for now

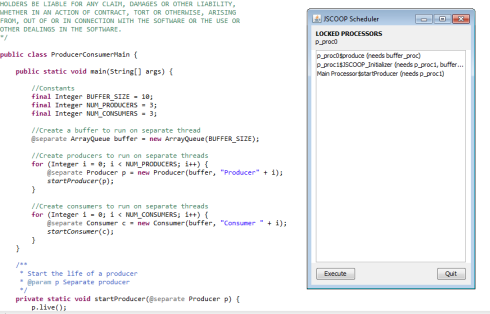

There’s a lot more to the system, but the above serves as a good first step for programmers familiar with concurrency issues in understanding how to program with JSCOOP. Specifically, there is a Scheduler for the entire system that I haven’t mentioned; this checks preconditions, manages Processors, and ensures fairness.

To trace a deadlock, I put my head down for 3 hours, talked to no one, grunted a few times, and then let out a scream of success when I had built a drop-in replacement for the JSCOOP scheduler that was written before I started the project. Thanks, CSC301, for making me learn Swing (and sorry to everyone else who works in BA2270 for my unnecessary enthusiasm yesterday).

JSCOOP code and manual scheduler

I’m working at the University of Toronto this summer, on a research project called JSCOOP. We’re bringing the SCOOP concurrency model to Java, by way of an Eclipse plugin and Java 1.5 annotations. It’s really, really cool stuff that started at York University and moved down here. I’m working under Marsha Chechik with Faraz Torshizi.

I’m also continuing the Cowichan parallel survey with Andrew Borzenko, under Greg Wilson. Last term we successfully implemented the problem set using Boost::MPI and Threading Building Blocks (TBB). So that’s two parallel paradigms down, and many, many more to go. This is what we’re up to this term:

- Tightening up the serial implementation. There’s a lot to be said for having a strong base to start from, and having the fastest serial implementation we can produce will be important for performance comparison.

- Standardizing the header files. As we’re only looking at implementing the problem set in C++ using libraries available to it at the moment, we’re defining a standard interface for the problems so that they can be called/chained in an abstract fashion. This also lets us put the performance evaluation code in one place.

- Uploading what we have so far. By the end of the term, we want our work to be available in a publicly viewable location, complete with repository. We have that right now, to some extent, but clean-up must occur before we can actually “publish”.

- Implementing the problem set with two more parallel systems. I’m using a product called LinuxTuples and Andrew’s using OpenMP.

I’m finding tuple spaces to be really interesting, and very theoretically pleasing. The performance seems to be decent too, and it seems like every problem fits into a nice box. More on that later.

Eve has, as one can probably tell from the sorry state of this page, come to a standstill. I’ll need to re-evaluate and make some less lofty (read: attainable) goals and an actual roadmap. When I get time to work on it, I’ll post more.